
What I'm trying to do is fairly simple when we're dealing with a local file, but the problem comes when I try to do this with a remote URL.

Basically, I'm trying to create a PIL image object from a file pulled from a URL. Sure, I could always just fetch the URL and store it in a temp file, then open it into an image object, but that feels very inefficient.

Here's what I have:

It flakes out complaining that seek() isn't available, so then I tried this:

But that didn't work either. Is there a better way to do this, or is writing to a temporary file the accepted way of doing this sort of thing?
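For comparison, here is a rough sketch of the temporary-file route the question wants to avoid. It uses only the standard library. The name `fetch_to_tempfile` is illustrative, and the caller is assumed to delete the file afterwards.

```python
import shutil
import tempfile
import urllib.request


def fetch_to_tempfile(url: str, suffix: str = "") -> str:
    """Download url into a named temporary file and return its path.

    delete=False keeps the file after it is closed; the caller must
    remove it (for example with os.remove) when finished.
    """
    with urllib.request.urlopen(url, timeout=10) as response:
        with tempfile.NamedTemporaryFile(suffix=suffix, delete=False) as tmp:
            # stream the body to disk in chunks rather than all at once
            shutil.copyfileobj(response, tmp)
    return tmp.name
```

The returned path could then be passed to `Image.open(path)`. The answers below avoid the disk round-trip entirely.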

Asked by Daniel Quinn. 9 answers.

In Python 3 the StringIO and cStringIO modules are gone.

In Python 3 you should use io.BytesIO instead:
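Here is a minimal Python 3 sketch, assuming Pillow is installed. It reads the response body into bytes and wraps them in `io.BytesIO`. That object supports the `seek()` calls Pillow makes, which the raw HTTP response does not. The function name is illustrative.

```python
import io
import urllib.request

from PIL import Image


def open_image_from_url(url: str, timeout: float = 10.0) -> Image.Image:
    """Download an image and open it with Pillow, without a temp file."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        data = response.read()  # the whole body as a bytes object
    # BytesIO turns the bytes into a seekable, in-memory file object
    image = Image.open(io.BytesIO(data))
    image.load()  # decode the pixel data now rather than lazily
    return image
```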

Andres Kull

I use the requests library. It seems to be more robust.
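With requests, a sketch might look like the following, assuming both requests and Pillow are installed. `response.content` holds the body as bytes, and `BytesIO` makes it seekable for Pillow. The function name is illustrative.

```python
import io

import requests
from PIL import Image


def open_image_with_requests(url: str, timeout: float = 10.0) -> Image.Image:
    """Fetch an image with requests and open it with Pillow in memory."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # turn HTTP 4xx/5xx into an exception
    # response.content is the raw body as bytes
    image = Image.open(io.BytesIO(response.content))
    image.load()
    return image
```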

Saurav

For those of you who use Pillow, from version 2.8.0 you can:

or if you use requests:
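Both forms above, passing the response object straight to `Image.open`, can be sketched as follows. This assumes a recent Pillow plus requests. Current Pillow versions copy a stream into an in-memory buffer when `seek()` fails, which is what makes this possible. The function names are illustrative.

```python
import urllib.request

import requests
from PIL import Image


def open_with_urlopen(url: str) -> Image.Image:
    """Hand the non-seekable urlopen() response directly to Pillow."""
    with urllib.request.urlopen(url, timeout=10) as response:
        image = Image.open(response)
        image.load()  # finish decoding before the response is closed
    return image


def open_with_requests_raw(url: str) -> Image.Image:
    """Same idea with requests: stream=True exposes the raw body stream."""
    with requests.get(url, stream=True, timeout=10) as response:
        response.raise_for_status()
        # let urllib3 undo any gzip/deflate Content-Encoding on the body
        response.raw.decode_content = True
        image = Image.open(response.raw)
        image.load()
    return image
```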


In Python 2, use StringIO to turn the string returned by read() into a file-like object:

For those doing some sklearn/NumPy post-processing (e.g. deep learning), you can wrap the PIL object with np.array(). This might save you from having to Google it like I did:
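Here is a sketch of that conversion, assuming Pillow and NumPy are installed. The function name is illustrative. Converting to RGB first guarantees a `(height, width, 3)` array even when the source is a palette, greyscale, or RGBA image.

```python
import io
import urllib.request

import numpy as np
from PIL import Image


def image_url_to_array(url: str) -> np.ndarray:
    """Download an image and return it as a (height, width, 3) uint8 array."""
    with urllib.request.urlopen(url, timeout=10) as response:
        image = Image.open(io.BytesIO(response.read()))
        # convert("RGB") normalises palette/greyscale/RGBA images to 3 channels
        return np.array(image.convert("RGB"))
```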

Unlike other methods, this method also works in a for loop!

Select the image in Chrome, right-click it, choose "Copy image address", and paste the address into a str variable (my_url) to read the image:

Then open it:

The arguably recommended way to do image input/output these days is to use the dedicated package ImageIO. Image data can be read directly from a URL with one simple line of code:

Many answers on this page predate the release of that package and therefore do not mention it. ImageIO started out as a component of the scikit-image toolkit. It supports a number of scientific formats on top of the ones provided by the popular image-processing library Pillow. It wraps it all in a clean API focused solely on image input/output. In fact, SciPy removed its own image reader/writer in favor of ImageIO.


I am creating a program that will download a .jar (Java) file from a web server by reading the URL specified in the .jad file of the same game/application. I'm using Python 3.2.1.

I've managed to extract the URL of the JAR file from the JAD file (every JAD file contains the URL to the JAR file), but as you may imagine, the extracted value is a string (type str).

Here's the relevant function:

However, I always get an error saying that the type in the function above has to be bytes, not string. I've tried URL.encode('utf-8') and also bytes(URL, encoding='utf-8'), but I always get the same or a similar error.

So basically my question is: how do I download a file from a server when the URL is stored as a string?
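For what it's worth, `urllib.request.urlopen` accepts the URL as an ordinary str. Bytes come into play for the data the server sends back: text such as the JAD file has to be decoded before it can be searched, and binary data such as the JAR has to be written in "wb" mode. The sketch below is a hypothetical helper, not the asker's function. It assumes a UTF-8 JAD file containing the standard `MIDlet-Jar-URL` attribute.

```python
import urllib.parse
import urllib.request


def jar_url_from_jad(jad_url: str) -> str:
    """Return the absolute JAR URL named in a JAD file, as an ordinary str.

    Hypothetical helper: assumes a UTF-8 JAD file with a
    "MIDlet-Jar-URL: ..." line.
    """
    with urllib.request.urlopen(jad_url, timeout=10) as response:
        # read() gives bytes; decode them to get text we can search
        text = response.read().decode("utf-8")
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "MIDlet-Jar-URL":
            # resolve a relative JAR URL against the JAD file's own URL
            return urllib.parse.urljoin(jad_url, value.strip())
    raise ValueError("no MIDlet-Jar-URL attribute found")
```

The returned str can go straight back into `urlopen()` without any encoding step.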

Asked by Bo Milanovich. 7 answers.

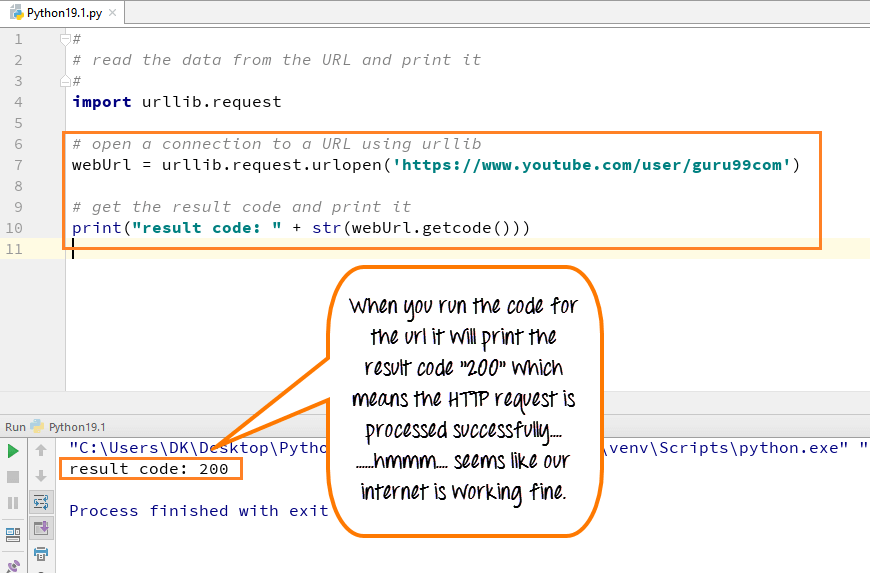

If you want to obtain the contents of a web page into a variable, just read the response of urllib.request.urlopen:
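`read()` returns bytes. To get a str, the sketch below decodes the body with the charset the server declares in Content-Type and falls back to UTF-8 when none is given. The fallback is an assumption, and `fetch_text` is an illustrative name.

```python
import urllib.request


def fetch_text(url: str) -> str:
    """Download a page and return its body as a str."""
    with urllib.request.urlopen(url, timeout=10) as response:
        raw = response.read()  # bytes
        # use the charset from Content-Type; assume UTF-8 if none is declared
        charset = response.headers.get_content_charset() or "utf-8"
    return raw.decode(charset)
```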

The easiest way to download and save a file is to use the urllib.request.urlretrieve function:
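Here is a minimal sketch of `urlretrieve`, which downloads straight into the given file and returns a `(filename, headers)` tuple. The wrapper name is illustrative.

```python
import urllib.request


def save_with_urlretrieve(url: str, filename: str) -> str:
    """Download url straight into filename; returns the local file name."""
    # urlretrieve returns a (filename, headers) tuple
    local_filename, _headers = urllib.request.urlretrieve(url, filename)
    return local_filename
```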

But keep in mind that urlretrieve is considered legacy and might become deprecated (not sure why, though).

So the most correct way to do this would be to use the urllib.request.urlopen function to return a file-like object that represents an HTTP response and copy it to a real file using shutil.copyfileobj.
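A sketch of that approach follows. `shutil.copyfileobj` copies the response to the file in fixed-size chunks, so the whole body never has to sit in memory. The function name is illustrative.

```python
import shutil
import urllib.request


def download_file(url: str, filename: str) -> None:
    """Stream url into filename without loading the whole body into memory."""
    with urllib.request.urlopen(url, timeout=30) as response, open(filename, "wb") as out_file:
        # copyfileobj reads and writes chunk by chunk until EOF
        shutil.copyfileobj(response, out_file)
```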

If this seems too complicated, you may want to go simpler and store the whole download in a bytes object and then write it to a file. But this works well only for small files.
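The simpler in-memory variant might look like this. The name and the byte-count return value are illustrative choices. It is suitable for small files only, since `read()` holds the entire body in one bytes object.

```python
import urllib.request


def download_small_file(url: str, filename: str) -> int:
    """Read the whole response into memory, write it out, return the byte count."""
    with urllib.request.urlopen(url, timeout=30) as response:
        data = response.read()  # a bytes object holding the entire body
    with open(filename, "wb") as out_file:
        out_file.write(data)
    return len(data)
```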

It is possible to extract .gz (and maybe other formats) compressed data on the fly, but such an operation probably requires the HTTP server to support random access to the file.
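One way to attempt on-the-fly extraction is to wrap the response in `gzip.GzipFile` and copy the decompressed stream to disk. GzipFile reads the compressed data front to back, but the caveat above about server support still applies. The function name is illustrative.

```python
import gzip
import shutil
import urllib.request


def download_and_gunzip(url: str, filename: str) -> None:
    """Decompress a .gz resource while it is being downloaded."""
    with urllib.request.urlopen(url, timeout=30) as response:
        # GzipFile decompresses lazily as bytes are read from the response
        with gzip.GzipFile(fileobj=response) as uncompressed, open(filename, "wb") as out_file:
            shutil.copyfileobj(uncompressed, out_file)
```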


Oleh Prypin

I use the requests package whenever I need something related to HTTP requests, because its API is very easy to start with:

First, install requests (for example with pip install requests).


Then the code:
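Here is a sketch of a requests-based download, assuming requests is installed. `stream=True` plus `iter_content` writes the body in chunks instead of loading it all at once. The function name and the 64 KiB chunk size are illustrative choices.

```python
import requests


def download_with_requests(url: str, filename: str, chunk_size: int = 64 * 1024) -> None:
    """Download url to filename with requests, streaming in chunks."""
    with requests.get(url, stream=True, timeout=30) as response:
        response.raise_for_status()  # fail loudly on HTTP errors
        with open(filename, "wb") as out_file:
            # iter_content yields bytes chunks as they arrive
            for chunk in response.iter_content(chunk_size=chunk_size):
                out_file.write(chunk)
```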

Ali Faki

I hope I understood the question right, which is: how to download a file from a server when the URL is stored in a string type?

I download files and save them locally using the code below:
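When saving locally, the file name can be taken from the URL string itself. The sketch below uses the last path segment, so `.../game.jar` becomes `game.jar`. The function name and the `download.bin` fallback are hypothetical choices.

```python
import os
import posixpath
import shutil
import urllib.parse
import urllib.request


def download_to_dir(url: str, dest_dir: str) -> str:
    """Save url inside dest_dir, named after the last URL path segment."""
    path = urllib.parse.urlsplit(url).path
    # unquote turns %20 etc. back into characters; basename drops directories
    name = posixpath.basename(urllib.parse.unquote(path)) or "download.bin"
    target = os.path.join(dest_dir, name)
    with urllib.request.urlopen(url, timeout=30) as response, open(target, "wb") as out_file:
        shutil.copyfileobj(response, out_file)
    return target
```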

Here we can use urllib's legacy interface in Python 3:


The following functions and classes are ported from the Python 2 module urllib (as opposed to urllib2). They might become deprecated at some point in the future.

Example (two lines of code):
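The same legacy `urlretrieve` also accepts a `reporthook` callback. It is called once before the first block and again after each block, with `(block_number, block_size, total_size)`, where `total_size` is -1 if the server sends no Content-Length. The sketch below prints simple progress and records the calls. The function name is illustrative.

```python
import urllib.request


def download_with_progress(url: str, filename: str) -> list[tuple[int, int, int]]:
    """urlretrieve with a progress hook; returns the recorded hook calls."""
    calls = []

    def report(block_num: int, block_size: int, total_size: int) -> None:
        # total_size is -1 when no Content-Length header is available
        calls.append((block_num, block_size, total_size))
        if total_size > 0:
            done = min(block_num * block_size, total_size)
            print(f"\r{done}/{total_size} bytes", end="")

    urllib.request.urlretrieve(url, filename, reporthook=report)
    print()
    return calls
```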


You can use the wget package from PyPI (https://pypi.python.org/pypi/wget), a pure-Python module named after the popular downloading shell tool. This is the simplest method because you don't need to open the destination file yourself. Here is an example:

Yes, requests is definitely a great package for anything related to HTTP requests, but we need to be careful with the encoding of the incoming data as well. Below is an example that explains the difference:
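requests exposes the body twice. `response.content` is bytes, exactly as received. `response.text` is a str decoded with `response.encoding`, which requests takes from the Content-Type charset (defaulting to ISO-8859-1 for text/* types that declare none). If that choice is wrong, setting `response.encoding` before reading `.text` corrects the decoding. A sketch, assuming requests is installed; `fetch_both` is an illustrative name.

```python
from typing import Optional

import requests


def fetch_both(url: str, encoding: Optional[str] = None) -> tuple[bytes, str]:
    """Return the body both as raw bytes and as decoded text."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    if encoding is not None:
        # override the encoding requests inferred from the headers
        response.encoding = encoding
    # .content: bytes exactly as received (use this for .jar files, images, ...)
    # .text: str decoded with response.encoding
    return response.content, response.text
```

For binary downloads such as the JAR in the question, write `.content` to a file opened with "wb". `.text` is only meaningful for textual responses.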